Eryka Dellenbach's Virtual Ventriloquism

Dance inside an HTC Vive HMD Headset

December 14th, 2016

This was one of the more abstract, conceptual, and elegant pieces of the show. It directly confronted ways in which the intrinsically personal experience of VR can be presented to an audience, and what that means for the ideas of 'play' and 'performance.' Instead of a marionette being pulled by a string, Eryka's body was confined to the length of the umbilical-like cord of the VR headset. When she tugged on her own string, it moved the head of the puppet, which looked down upon her.

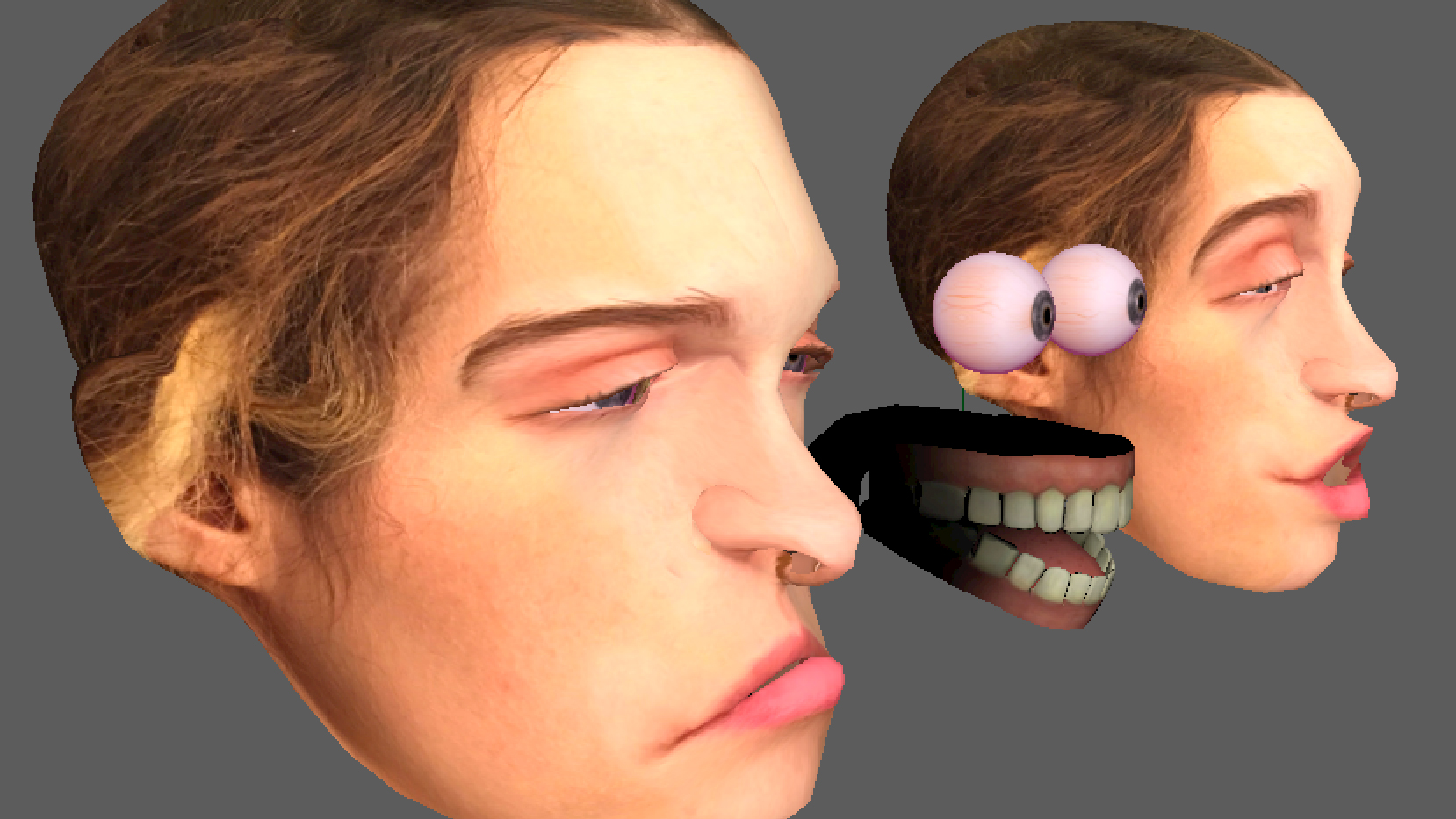

The puppet was a 3D model of Eryka's face. Technically, Eryka's physical position on the stage manipulated the puppet along two separate axes and also guided its orientation. The result is the eerie effect of a magic portrait that follows the performer around the room.

How was Eryka's face modeled? At first I captured a high-poly version of Eryka's face with 123D Catch. Eryka's vision involved a transition between an embryonic texture and a detailed adult self-portrait along one axis, and between two different expressions (Lust and Rage) along the other. The high-poly 3D scan proved too cumbersome for animating the expressions, so after using it as a size template I discarded it and hand-modeled a symmetrical face in Maya instead.

This symmetrical model allowed me to make two blend shapes, one for each expression.

These blend shapes are exported in the .fbx file and are accessible within Unity.
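
For readers new to blend shapes: in the usual linear model, each blend shape stores per-vertex offsets from the neutral face, and the renderer adds those offsets scaled by the shape's weight. Writing $\mathbf{v}_0$ for a neutral vertex, $\mathbf{v}_L$ and $\mathbf{v}_R$ for the same vertex in the sculpted Lust and Rage targets, and using Unity's default 0 to 100 weight range, this is roughly:

$$\mathbf{v} = \mathbf{v}_0 + \frac{w_L}{100}\left(\mathbf{v}_L - \mathbf{v}_0\right) + \frac{w_R}{100}\left(\mathbf{v}_R - \mathbf{v}_0\right), \qquad 0 \le w_L,\, w_R \le 100$$

This is also why every target must share the base mesh's vertex count and order: the offsets are defined vertex by vertex.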

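    public GameObject faceObject;
    private Vector3 mousePosition;
    private SkinnedMeshRenderer faceRend;
    private Mesh faceMesh;
    private Material faceMaterial;

    void Start () {
        setupMeshes();
    }

    void setupMeshes(){
        // The "face" child carries the imported .fbx mesh and its blend shapes
        GameObject face = faceObject.transform.Find("face").gameObject;
        faceRend = face.GetComponent<SkinnedMeshRenderer>();
        // Assumption: the fading material is the renderer's first material
        faceMaterial = faceRend.material;
    }

    ...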

    Shader "Unlit/UnlitAlphaWithFade"
    {
        Properties
        {
            _Color ("Color Tint", Color) = (1,1,1,1)
            _MainTex ("Base (RGB) Alpha (A)", 2D) = "white" {}
        }

        Category
        {
            Lighting On
            ZWrite On
            Cull back
            Blend SrcAlpha OneMinusSrcAlpha
            Tags {Queue=Transparent}

            SubShader
            {
                Pass
                {
                    // Multiply the texture (RGB and alpha) by the _Color tint,
                    // so the tint's alpha fades the whole face
                    SetTexture [_MainTex] {
                        ConstantColor [_Color]
                        Combine Texture * constant
                    }
                }
            }
        }
    }

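Above is the transparent shader. `Combine Texture * constant` multiplies both the color and the alpha of the texture by the `_Color` tint, and `Blend SrcAlpha OneMinusSrcAlpha` composites the result over whatever is behind the face, so the tint's alpha controls how see-through the face is.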
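
To make the fade concrete, here is a hedged C# sketch of the per-pixel arithmetic those two ShaderLab lines describe. It runs outside Unity: `System.Numerics` vectors stand in for colors, the function names are hypothetical, and lighting, depth writes, and culling are ignored.

```csharp
using System;
using System.Numerics;

// Hypothetical, engine-independent sketch of the per-pixel math in the fade shader.
// Colors are RGBA in 0..1, stored in Vector4 as (X, Y, Z, W) = (R, G, B, A).

// "Combine Texture * constant": the texel times the _Color tint, component by component.
// With a single combiner, the same product is used for both RGB and alpha.
static Vector4 CombineTextureTimesTint(Vector4 texel, Vector4 tint) => texel * tint;

// "Blend SrcAlpha OneMinusSrcAlpha": result = src.rgb * src.a + dst.rgb * (1 - src.a).
static Vector3 AlphaBlend(Vector4 src, Vector3 dst)
{
    var srcRgb = new Vector3(src.X, src.Y, src.Z);
    return srcRgb * src.W + dst * (1f - src.W);
}

// One pixel of the face composited over a background color.
static Vector3 FadedPixel(Vector4 texel, Vector4 tint, Vector3 background) =>
    AlphaBlend(CombineTextureTimesTint(texel, tint), background);

// Example: an opaque white texel over the green background at three tint alphas.
var white = new Vector4(1f, 1f, 1f, 1f);
var green = new Vector3(0f, 1f, 0f);
foreach (var a in new[] { 1f, 0.5f, 0f })
{
    Console.WriteLine($"tint alpha {a}: {FadedPixel(white, new Vector4(1f, 1f, 1f, a), green)}");
}
```

Because the tint multiplies the texture's own alpha, any transparency already painted into the texture is preserved, and the tint's alpha acts as a global fade on top of it.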

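    // Fade the face by writing only the alpha of the material's tint (_Color).
    // Takes a normalized 0..1 float so it can be called directly from Update below.
    void blendTexture(float alpha){
        Color color = faceMaterial.color;
        faceMaterial.color = new Color(color.r, color.g, color.b, alpha);
    }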

`blendTexture` drives that fade from script: `Material.color` reads and writes the shader's `_Color` property, so changing only its alpha channel changes the face's opacity.

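    // Split one normalized 0..1 axis into two expressions:
    // above 0.5 blends toward Lust, below 0.5 toward Rage.
    // Each half is scaled by 200 so it spans the 0..100 blend-shape weight range.
    void blendExpression(float axis){
        if(axis > .5f){
            blendLust((axis - .5f) * 200f);
            blendRage(0f); // clear the other expression so no residue lingers
        } else {
            blendRage((.5f - axis) * 200f);
            blendLust(0f);
        }
    }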

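    // Blend-shape index 0 is Lust and index 1 is Rage, applied to both renderers.
    // noFaceRend is a second SkinnedMeshRenderer declared outside this excerpt.
    void blendLust(float blend){
        faceRend.SetBlendShapeWeight(0, blend);
        noFaceRend.SetBlendShapeWeight(0, blend);
    }

    void blendRage(float blend){
        faceRend.SetBlendShapeWeight(1, blend);
        noFaceRend.SetBlendShapeWeight(1, blend);
    }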
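
Pulled out of Unity, the expression mapping is easy to check by hand. This engine-independent C# sketch uses a hypothetical `ExpressionWeights` helper that returns both weights at once; the clamp and the zeroing of the opposite expression are assumptions added for illustration.

```csharp
using System;

// Hypothetical engine-independent version of the expression mapping.
// t is a normalized 0..1 position along the expression axis; 0.5 is the neutral face.
// Returns blend-shape weights in Unity's default 0..100 range.
static (float lust, float rage) ExpressionWeights(float t)
{
    // Clamp so positions outside the calibrated area cannot overshoot 100.
    t = Math.Clamp(t, 0f, 1f);

    // Each half of the axis is stretched to the full 0..100 range (0.5 * 200 = 100),
    // and the opposite expression is pinned at 0 so the two never mix.
    return t > 0.5f
        ? ((t - 0.5f) * 200f, 0f)
        : (0f, (0.5f - t) * 200f);
}

// Example: sweep across the axis from full Rage to full Lust.
foreach (var pos in new[] { 0f, 0.25f, 0.5f, 0.75f, 1f })
{
    var (lust, rage) = ExpressionWeights(pos);
    Console.WriteLine($"t={pos}: lust={lust}, rage={rage}");
}
```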

    // Mouse prototype: normalize a screen position (e.g. Input.mousePosition)
    // to 0..1 across the screen, using the parameter rather than re-reading Input.
    Vector3 getRelativePosition(Vector3 mousePosition){
        float newX = mousePosition.x / Screen.width;
        float newY = mousePosition.y / Screen.height;
        return new Vector3(newX, newY, mousePosition.z);
    }

The relative position calculated from the mouse's x and y was then easily translated to the x and z of the Vive headset (a bird's-eye view of the stage).

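    // viveHeadset, groundPlane (a Collider), vivePosition and lookAtVive
    // are declared outside this excerpt.
    void Update(){
        vivePosition = viveHeadset.transform.position;
        Vector3 relativePosition = getRelativePosition(vivePosition, groundPlane);

        // Turn the puppet head toward the dancer
        lookAtVive(vivePosition);
        // Stage x drives the expression (inverted); stage z drives the texture fade
        blendExpression(1f - relativePosition.x);
        blendTexture(relativePosition.z);
    }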
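
The body of `lookAtVive` isn't shown here. In Unity, a look-at can be as short as calling `Transform.LookAt` on the puppet head with the headset position, but that is an assumption, not necessarily what this project did. For the geometry underneath, here is an engine-independent C# sketch (a hypothetical `LookAngles` helper, with `System.Numerics.Vector3` standing in for Unity's `Vector3`) that computes the yaw and pitch a head needs to face a target, assuming Unity's Y-up, Z-forward convention.

```csharp
using System;
using System.Numerics;

// Hypothetical sketch: yaw (about the vertical Y axis) and pitch (about X), in degrees,
// that turn a head at `head` to face `target`. Assumes Y-up, Z-forward axes, where
// positive pitch tilts the forward direction downward, as in Unity's Euler angles.
static (float yaw, float pitch) LookAngles(Vector3 head, Vector3 target)
{
    Vector3 d = target - head;
    // Yaw: heading in the horizontal X/Z plane; 0 when the target is straight ahead on +Z.
    float yaw = MathF.Atan2(d.X, d.Z) * 180f / MathF.PI;
    // Pitch: positive when the target is below the head (the puppet looking down at the dancer).
    float horizontal = MathF.Sqrt(d.X * d.X + d.Z * d.Z);
    float pitch = MathF.Atan2(-d.Y, horizontal) * 180f / MathF.PI;
    return (yaw, pitch);
}

// Example: a head 3 m up, a headset 1.7 m up, 2 m in front and 1 m to the right.
var (headYaw, headPitch) = LookAngles(new Vector3(0f, 3f, 0f), new Vector3(1f, 1.7f, 2f));
Console.WriteLine($"yaw {headYaw:F1} deg, pitch {headPitch:F1} deg");
```

In Unity, passing these angles to `Quaternion.Euler(pitch, yaw, 0f)` should give the matching rotation, since that method applies the X rotation before the Y rotation.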

    Vector3 getRelativePosition(Vector3 vivePosition, Collider groundPlane){
        // World-space bounding box of the calibrated 'virtual floor tape'
        Bounds b = groundPlane.bounds;

        // Distance from the max edge of the plane, normalized by its size on each axis.
        // Clamp01 keeps the result in 0..1 if the dancer steps off the plane.
        float relativeX = Mathf.Clamp01((b.max.x - vivePosition.x) / b.size.x);
        float relativeZ = Mathf.Clamp01((b.max.z - vivePosition.z) / b.size.z);

        return new Vector3(relativeX, 0f, relativeZ);
    }

Anything that relates virtual content to physical space needs some calibration. I quickly made an ad hoc 'virtual floor tape': an eyeballed 3D ground plane in the virtual environment that mapped to specific dimensions of the physical stage. (Tip: I used the Vive controller positions to help find the corners of the ground plane.) The dimensions of the ground plane feed into the parameters that drive the puppet.

The audience never saw this ground plane, because what they saw came from a stationary virtual camera focused on the head. I also made the background green as a quick compositing solution, so we could chroma key it in Resolume and prepare for transitions between sets. I can only imagine what it was like to dance, effectively blindfolded, inside this field of white and bright green sky, knowing that over a hundred people (and a giant puppet head) were watching your every move.
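
The controller tip can be sketched as a small calibration step: touch a tracked controller to each taped corner, record its position, and take the axis-aligned rectangle around those samples, the same kind of box Unity's `Collider.bounds` reports. This engine-independent C# sketch uses hypothetical `FloorBounds` and `Normalize` helpers and made-up corner samples; `Normalize` mirrors the distance-from-the-max-edge normalization in `getRelativePosition`, with a clamp to 0..1.

```csharp
using System;
using System.Numerics;

// Hypothetical calibration helper: build an axis-aligned floor rectangle
// from corner positions sampled with a tracked controller.
static (Vector3 min, Vector3 max) FloorBounds(Vector3[] corners)
{
    if (corners.Length == 0) throw new ArgumentException("Need at least one corner sample.");
    Vector3 min = corners[0], max = corners[0];
    foreach (var c in corners)
    {
        min = Vector3.Min(min, c);
        max = Vector3.Max(max, c);
    }
    return (min, max);
}

// Distance from the max edge divided by the rectangle's size on each horizontal axis,
// clamped so positions off the taped area stay in 0..1. Height (Y) is ignored.
static (float x, float z) Normalize(Vector3 p, Vector3 min, Vector3 max)
{
    Vector3 size = max - min;
    if (size.X <= 0f || size.Z <= 0f) throw new ArgumentException("Floor rectangle has zero width or depth.");
    float x = Math.Clamp((max.X - p.X) / size.X, 0f, 1f);
    float z = Math.Clamp((max.Z - p.Z) / size.Z, 0f, 1f);
    return (x, z);
}

// Illustrative (made-up) corner samples, slightly uneven as hand-placed samples would be.
var samples = new[]
{
    new Vector3(-2.0f, 0.02f, -1.5f), new Vector3(2.1f, 0.01f, -1.4f),
    new Vector3(2.0f, 0.00f, 1.5f),   new Vector3(-1.9f, 0.03f, 1.6f),
};
var (lo, hi) = FloorBounds(samples);
var (nx, nz) = Normalize(new Vector3(0f, 1.7f, 0f), lo, hi);
Console.WriteLine($"bounds {lo} .. {hi}; headset at normalized ({nx:F2}, {nz:F2})");
```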